If you have ever had a chance to discuss software performance with your engineering colleagues, you’ve probably noticed that a lot of us like to throw around this infamous “Big O” to explain why something is running slow (or fast).

You’ve probably heard of something like “running in polynomial time,” “exponential” or “logarithmic,” and how some of these are better than others, but you’ve probably never heard an actual explanation of why this is the case.

From my personal experience, when it comes to a discussion of algorithms, people rarely bother to explain why what it is exactly that a certain algorithm performs better than another. If I were to tell you that, when it comes to driving speed, a BMW is a lot faster than a dirt bike, you would probably notice immediately that there is a catch — the conditions under which their performance may be measured differ greatly.

The same line of logic can and should be applied when discussing algorithms — we have to consider the context before slapping on a label. But, before we dig into the actual definitions and explanations, let’s quickly go through some common misconceptions about Big O when it comes to discussing algorithm performance.

Common misconceptions

- Big O measures speed

This is the one you’re most likely to hear when discussing complexity with someone who hasn’t yet fully grasped the concepts. Most of the time, Big O cannot tell you anything about the actual running time of an algorithm.

- Big O is a statement about time

While partially correct, by definition, it’s not solely about time. Big O can be used to describe space complexity, as well as time complexity, or any other complexity, if you want.

What is Big O, actually?

From a software engineering standpoint, one definition I like to use is that Big O actually represents how fast things can get really ugly. For example, take a look at the following questions:

- How much of an increase in traffic load can my web servers take before they break down?

- How large must an HTTP request body become before my custom JSON deserializer fails?

- Why does my image processor work fine with 2KB images, but take so long to process 10KB images?

If these sorts of questions are stumping you, they just might be the kinds of problems where you need to identify the correct time complexity and find a feasible remedy. Being able to recognize potential issues with your complexity may save you hours, or even days, of needless performance monitoring and examination.

Now, let’s dig deeper and try a different definition.

Given the input of size n, Big O describes an upper bound on the number of relevant, primitive operations the algorithm needs to perform.

This is both mathematically incorrect and somewhat vague, but still a great starting point. So, let’s dive right into it, and we’ll slide into more formal definitions later.

Primitive operation

Primitive operations are basic computations performed by an algorithm. Examples are evaluating an expression, assigning a value to a variable, indexing into an array, calling a method, returning from a method, etc. They are easily identifiable in pseudocode and are largely independent from the programming language.

Let’s look at an example:

public void SimpleFunction(List<int> n)

{

int a = 1;

int b = 2;

int sum = a + b;

Console.WriteLine(sum);

}How many primitive operations do we have here?

Let’s break it down:

public void SimpleFunction(List n)

{

int a; // declaration

a = 1; // assignment

int b; // declaration

b = 2; // assignment

int sum; // declaration

sum = a + b; // two primitive operations, addition and assignment

Console.WriteLine(sum); // method call

}

So, we have three declarations, three assignments, an addition and a method call. If we agree that these are our primitive operations, we should be able to work out the following: Given the input size of n, the number of operations this function will perform is 8, or to put it more formally, f(n) = 8.

We can clearly see that the size of the input does not matter at all, and this function will always perform the same, constant number of operations.

Let’s try a different one:

public void SumFunction(List n)

{

int sum = 0;

for (int i = 0; i < n.Count; i++)

{

sum = sum + i;

}

Console.WriteLine(sum);

}

This one looks harder to break, but let’s give it a shot. One thing that will make it easier is to convert this for loop into an identical while loop.

public void SumFunction(List n)

{

int sum;

sum = 0;

int i;

i = 0;

while (i < n.Count) // n comparisons

{

sum = sum + i; // n additions and assignments

i = i + 1; // n additions and assignments

}

sum = sum + 5; // one addition and one assignment

Console.WriteLine(sum);

}

Well, obviously, at first we have 2 declarations and 2 assignments, but there is also something else. This loop will evaluate i against n exactly n times, and also perform that many additions and assignments. Additionally, we will stir this up with one more addition at the end. So, this is how we stand at the moment: 2 declarations, 2 assignments, n comparisons, n additions, n assignments, 1 addition, 1 assignment and 1 method call.

If you remember from the last definition, if we want to measure something, we need to decide which primitive operation is relevant for us. Once we’ve done that, we can disregard the rest, assuming their performance will not consume resources (time or space), and we will only focus on relevant primitive operations.

Please note that declaring what is a primitive operation greatly depends on a number of factors, like compiler implementation or processor architecture. However, for practical purposes, you can declare anything as a primitive operation based on the level of abstraction that you want to use as your point of view.

The reasonable thing for us to do would be to choose an addition as our primitive operation and see how many additions this function performs based on its input (which would be n additions plus one more at the end). We will note this down as:

f(n) = n + 1

What this means is that, given the input of size n, we will need to perform n + 1 additions. A list of 100 numbers will give us a 101 addition, and a list of 1000 numbers will give us a 1001 addition. Now, with our definition of Big O from before, we can deduce, approximately, that the SUM function from above performs about n relevant primitive operations, or that it performs in O(n) time. As you might already know, the f(n) = n + 1 is called a linear function (the value of the function increases linearly to correspond to the value of the input). Therefore, O(n), as an approximation, is called linear time.

Linear function f(n) = n + 1

But what about our previous function, f(n) = 8? Well, first of all, we need to define a relevant primitive operation for it, as well. Choosing the addition will give us f(n) = 1. In time complexity theory, this is called constant time and is written as O(1).

Let’s consider the following:

public void DoSomething(List n)

{

SimpleFunction(n);

SumFunction(n);

}

We have a function of O(1) and O(n) running one after the other. This will give us a running time of O(n) + O(1). Adding Big O approximations follows the rule where the higher-order function takes precedence, and all others are disregarded. That means that O(n+1) = O(n) and O(n) + O(1) = O(n). In the same manner, we can disregard any constants, so f(n) = 3n + 5 falls under the O(n) order. This is very important, because we need to identify the weak spot — that single function that grows the fastest and will take our systems down.

Before discussing the upper bound and other function orders, let me get back to the primitive operations. As I’ve mentioned, you can choose your own relevant primitive operations, and I’ll give you an example of what this means in practice.

Let’s take a look at this piece of code:

public void Email(List employees)

{

for (int i = 0; i < employees.Count; i++)

{

_emailService.SendHolidayNotificationEmail(employees[i].Email);

_logger.Log(“Email sent”);

}

}

We know that sending an email is going to take a lot more time than logging, and it’s reasonable for us to choose it as a primitive operation for this function. This function itself might make O(n²) different primitive operations (e.g. validation based on the length of the email), but we can abstract that away, because it isn’t a function of input in our method. Changing the size of the list of employees will not change the running time of the SendHolidayNotificationEmail method.

We can safely disregard the logging as a trivial operation (as we do in practice, most of the time). There are no hard and fast rules on what should be a primitive operation, but you should always bear in mind that you need to define one for your function before you try to measure the performance — otherwise it’s just wild guessing.

But what about the upper bound?

Consider the following piece of code:

public void FindItem(List employees)

{

string name = “”;

for (int i = 0; i < n.Count; i++)

{

if (employees[i].Name == “Mark”)

break;

}

Console.WriteLine(name);

}

What is the running time of this function?

Well, it depends on whether there is an employee with that name and where he is in the list. Declaring an array access as a primitive operation, and given the list of size n, we could perform anywhere between 1 and n array accesses. With no specific statistical distribution, we can safely assume that, on average, Mark would be found somewhere in the middle, and we would need to perform approximately n/2 array accesses to find him.

But Big O is not about average-case, it’s about worst-case, and the worst-case would be n array accesses. More specifically, saying that something performs in O(n) time means that a certain algorithm will need to perform less than or equal to n number of operations.

Order of function

There are different types of running times.

Take a look at this example:

public void Multiplies(int n)

{

for (int i = 0; i < n; i++)

{

for (int j = 0; j < n; j++)

{

Console.WriteLine(i * j);

}

}

}

If we consider writing to the console as a primitive operation, we can see that we need to perform n*n operations, or n². This is quadratic time, or O(n²).

But what about this?

public void MultipliesWithCatch(int n)

{

for (int i = 0; i < n; i++)

{

for (int j = 0; j < 5; j++) // <– only to 5

{

Console.WriteLine(i * j);

}

}

}

Is this O(n²)?

No, it’s not. Given the size of the input n, the maximum number of operations performed will be 5*n, which puts it under the O(n) order. This is very important to notice — Big O describes function growth relative to the size of the input. Changing the size of the input does not affect the number of operations in the inner loop since it will always be five. Now we’re getting somewhere!

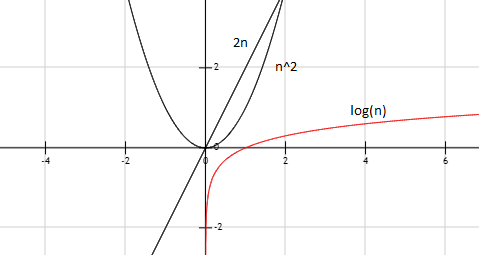

Now, there are other types of functions, so there must be other types of Big O notations, right? Cubic function would give us cubic running time, exponential functions would give us exponential running times, etc.

Different types of functions

But there are more complex functions — what about them? Well, as we have seen, the higher-order function takes precedence, and others are disregarded. So let’s assume we have measured the exact number of primitive operations that a given function can perform and we get the following result:

f(n) = n⁵ + 6n² + 5

According to the rules already mentioned, this belongs to O(n⁵). There are infinite variations, but the running time is always declared by that part of the function that grows the fastest. These are called polynomial running times, with O(n) and O(n²) as subsets. There are other types of running times, like exponential, logarithmic or factorial, and certain famous algorithms.

We will discuss other common running times that are described using Big O notation in my next post.